From the first electric guitar to the MTV music video boom, musicians have always embraced new ways to connect with their audiences. Now, in a digital-first world, that spirit of innovation is stronger than ever. Holograms, virtual worlds, AI-powered tools, and smart technologies are opening up fresh possibilities for creativity, sound, and spectacle.

New ways to collaborate

Technology has completely reshaped how artists work together. With digital audio workstations (DAWs) such as Ableton Live and Pro Tools, and cloud-based platforms like Splice, musicians can share projects and collaborate from anywhere in the world, sometimes in real time. Massive libraries of sounds and samples make it easy to experiment, spark ideas, and push creativity further. This accessibility doesn’t just speed things up – it also amplifies diverse voices, unlocking collaborations that might never have happened ten years ago.

Setting the stage

Performance tech is redefining live music experiences. On his DAMN. tour, Kendrick Lamar used projection mapping to make his stage split, collapse, and transform in real time. Coldplay lit up stadiums with Xylobands – LED wristbands that synced perfectly with their music. And in Las Vegas, the MSG Sphere’s record-breaking wraparound LED display turns an entire venue into a living canvas. With innovations like these, the audience becomes part of the show itself.

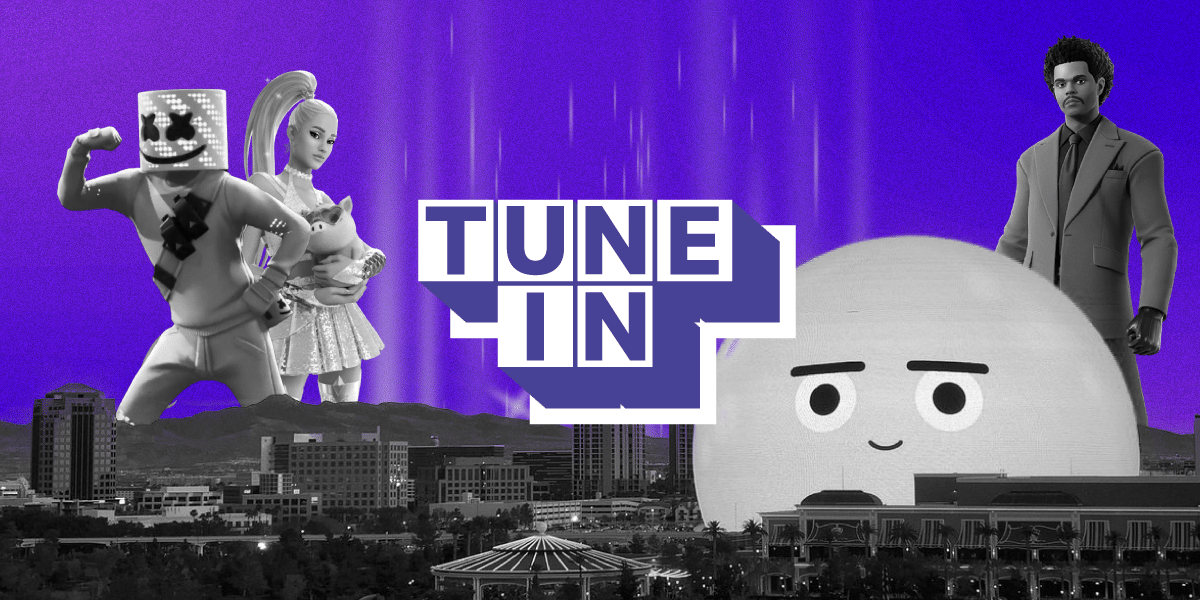

Performing in virtual spaces

Concerts no longer need a physical stage. Jack Harlow and Doja Cat have stepped into the metaverse with Roblox performances, while Travis Scott’s Fortnite show drew over 12 million virtual attendees – an event that felt as much like gaming as it did music. These kinds of interactive performances are reshaping what it means to ‘go to a gig’, blending music, tech, and culture in exciting new ways.

Embracing AI

AI is changing both how music is made and how it’s experienced. From streamlining production fundamentals, such as generating chord progressions and dialling in EQ and compression settings, to using stem separation to open up new possibilities for remixing, AI is enhancing creative workflows. Streaming platforms like Spotify and Apple Music use AI to personalise playlists. Generative tools like Suno let artists co-create with AI, and real-time models such as Magenta RealTime and Lyria RealTime produce music that shifts and evolves live.

Did you know? In 2023, BIMM partnered with DAACI, an innovative AI music software company that creates tools and technologies to enhance the creative process of making music. DAACI provided important internship opportunities and masterclasses, encouraging our students to think about the impact of AI, the ethics surrounding it, and its application in the creative industries. By taking this approach, we aim to better prepare our graduates to be at the forefront of the next generation of music professionals.

Augmented live performances

Aphex Twin’s live shows have become legendary not just for their sound, but for their visuals. Working with long-time collaborator Weirdcore, he transforms venues into sensory mazes of projection mapping, warped faces, flickering typography, and chaotic layers of imagery. The result is an audiovisual assault where music and visuals feel inseparable – less like a concert and more like stepping inside a living, unstable digital organism.

The next frontier

From AI-powered production to virtual arenas, the future of music is a space where imagination meets innovation. At BIMM, we’ll give you everything you need to stay ahead of the curve – whether that’s mastering the tools shaping today’s industry or experimenting with the ones that will define tomorrow.

Discover our courses and see how we can help you turn your passion into a future career in music.